AUSTIN, Texas, Nov. 16, 2008 - More supercomputers than ever are using Intel Corporation processors, according to the latest TOP500 list. The high performance computing (HPC) community is especially enthusiastic about quad-core Intel® Xeon® processors, which are driving the research and analytical capabilities of more than half the systems on the list. The 32nd edition of the TOP500 list shows that 379 of the world's top 500 systems, including the third-fastest system in the world, now have Intel inside. According to the list, Intel is powering 49 systems in the top 100. Systems using Intel® Xeon® quad-core processors dominate the list, holding 288 spots. Intel's year-old quad-core 45nm Intel® Xeon® processor 5400 series, built with Intel's reinvented high-k metal gate transistors, is used in 222 systems, including 32 powered by low-voltage variants. Intel-based supercomputing platforms are playing a pivotal role in a number of research areas, from improving the safety of space exploration to forecasting global climate conditions. More "mainstream" industries, such as financial services and health care, are also using Intel-based systems to achieve faster, more accurate results, to speed the pace of innovation and to improve their competitive advantage. In addition to hardware, Intel is delivering a wide range of software tools to the HPC community, including compilers and MPI libraries, which help customers make the most of multicore processing and improve the efficiency of clustered solutions. Approximately 75 percent of systems in the TOP500 are using Intel software tools. "We're proud that Intel processors and software tools are playing a significant role in driving the world's most important scientific research and advancements," said Kirk Skaugen, vice president and general manager of Intel's Server Platforms Group. "With our multi-core innovation powering so many systems on the TOP500, it's clear that Intel is committed to pushing the boundaries of supercomputing."
Over the past year, Intel has gained significant momentum in high-performance computing, signaled by major collaborations with Cray Inc. and NASA. Intel and Cray plan to develop a range of HPC systems and technologies driven by multi-core processing and advanced interconnects. Meanwhile, Intel, SGI and NASA are collaborating on Pleiades, a supercomputing project that is the No. 3 system on the list. Pleiades is intended to enable groundbreaking scientific discovery, with goals of reaching 1 PetaFLOPS in 2009 and 10 PetaFLOPS (10,000 trillion floating-point operations per second) by 2012. The semi-annual TOP500 list of supercomputers is the work of Hans Meuer of the University of Mannheim, Erich Strohmaier and Horst Simon of the U.S. Department of Energy's National Energy Research Scientific Computing Center, and Jack Dongarra of the University of Tennessee. The complete report is available at www.top500.org.

As part of its efforts to offer consumers an unparalleled technical support experience, HP today announced a series of new and updated programs designed to help consumers get answers to questions quickly and effectively online. A revamped global peer-to-peer online support forum allows members to connect with one another to exchange insights and tips, and to answer each other’s questions about their HP consumer products. The updated HP Customer Care website makes it easier for more than 20 million monthly visitors to find the information they need to solve issues. Online classes, offered at no charge, help customers with subjects like migrating to Windows Vista®, safe wireless computing and digital scrapbooking.(1) A series of online videos provides tips and tutorials on topics ranging from connecting dual monitors to configuring a TV tuner on a PC. The new and updated programs reflect changes in how HP customers share information and communicate online. According to Forrester Research, three out of four online adults in the United States now use social tools to connect with each other, compared with just 56 percent in 2007.(2) Traffic to the HP Customer Care site increased 18 percent from January to October 2008 compared with the same period in 2007, as more consumers go online for support. The online community initiative is the result of recent investments by HP to deliver superior consumer technical support worldwide. Internal HP surveys show these investments are making an impact – satisfaction with HP consumer support improved 30 percent from October 2007 to October 2008, following the launch of a new customer support infrastructure, new call centers and agent training programs in early 2008. “These programs represent a growing evolution in consumer support,” said Tara Bunch, vice president, Global Customer Support Operations, HP.
“Providing a way for customers to assist each other through a community and get better support online helps us achieve our goal of improving customer satisfaction worldwide.”
HP consumer support forum
Leading these online support programs is the newly designed consumer support forum where community members can share ideas, ask questions and help other members with solutions. The forum is divided into different categories based on broad topics such as operating systems or wireless networking for notebooks. Community members can personalize their HP experiences by tagging or bookmarking key boards, threads and messages, or by adding RSS feeds to keep track of new posts on key topics. HP plans to add additional components to the consumer support forum over the next several months, including blogs for technical gurus and community members, online seminars with moderated questions and answers, and wikis to enhance the support library with new content. HP will be actively monitoring the forums to help identify common support issues and gain customer feedback and insights. If customers can’t find solutions to their problems, assistance is available directly from HP by phone, email and real-time chat. The HP consumer support forums are available worldwide in English and will be rolling out in additional languages in the future. The HP consumer support forums are available now at www.hp.com/support/consumer-forum.
HP Customer Care
The HP Customer Care site has been revised and updated to make it easier for consumers to locate and download product drivers or find additional information about HP products. The site has more than 20 million unique visitor sessions per month and the updates were rolled out worldwide in more than 22 languages. Support pages have been simplified and modernized to create a customer-friendly design and flow. The improved HP Customer Care site is available at www.hp.com/support.
Free online classes(1)
HP is launching a new series of free online classes to help consumers with a number of subjects, from migrating to Windows Vista to photo restoration basics. In a recent HP survey of customers who completed a class, more than 93 percent said they would take another course and more than 92 percent said they would recommend the classes to a friend. The instructor-led classes have message boards that allow students to communicate with an instructor and with each other. More information about free online classes is available at www.hp.com/go/freeclasses.
HP how-to videos
HP has also produced more than 45 video tutorials, which are posted online at HP.com and on a number of video sharing sites. The initial series of videos, titled “Know Your PC,” centers on tips for learning how to use the new HP TouchSmart PC. Additional videos with tips on topics such as connecting dual monitors and retrieving files from floppy disks are available now, and more will be released in coming months. HP how-to videos can be accessed at www.hp.com/products/howtovideos.

HP today announced the industry’s first convertible notebook PC with multi-touch technology designed specifically for consumers. Building upon the touch innovation HP developed for its TouchSmart desktop PCs, the HP TouchSmart tx2 Notebook PC was developed for people on the go who value having their digital content at their fingertips – literally. The enhanced HP MediaSmart digital entertainment software suite on the tx2 allows users to more naturally select, organize and manipulate digital files such as photos, music, video and web content by simply touching the screen. “Breezing through websites and enjoying photos or video at the tap, whisk or flick of a finger is an entirely new way to enjoy digital content on a notebook PC,” said Ted Clark, senior vice president and general manager, Notebook Global Business Unit, Personal Systems Group, HP. “With the introduction of the TouchSmart tx2, HP is providing users an easier, more natural way to interact with their PCs, and furthering touch innovation.” The tx2 is the latest result of HP’s 25 years of touch technology experience, which began in 1983 with the introduction of the HP-150, a touch-screen PC that was well ahead of its time.
Digital media powerhouse
The tx2 gives customers the choice to set aside the keyboard and mouse in favor of a more natural user interface – the fingertip. HP’s multi-touch display delivers quick and easy access to information, entertainment and social media. The tx2 recognizes simultaneous input from more than one finger using “capacitive multi-touch technology,” which enables the use of gestures such as pinch, rotate, arc, flick, press and drag, and single and double tap. The convertible design with a twist hinge allows consumers to enjoy the TouchSmart in three modes: PC, display and tablet.
With a rechargeable digital ink pen, users can turn the tx2 into a tablet PC to write, sketch, draw, take notes or graph right onto the screen – and then automatically convert handwriting into typed text. With a starting weight of less than 4.5 pounds, the tx2 has a 12.1-inch diagonal BrightView LED display and an HP Imprint “Reaction” design. The tx2 notebook’s HP MediaSmart software lets customers enjoy photos, listen to music and watch Internet(1) TV or movies in high definition.(2) The software is optimized for multi-touch input while also making it simple to search digital content. In an effort to provide consumers with rich content through the Internet, HP has expanded its partnership with MTV Networks (MTVN) by offering video content from 10 television channels and online brands within MediaSmart’s TV module. Beginning in December, users can enjoy all the best content from Nickelodeon, the No. 1 entertainment brand for kids; MTV, the premier destination for music and youth culture programming; and COMEDY CENTRAL, the only all-comedy network and the No. 1 network in primetime for men ages 18 to 24. MTVN also plans to make content from Atom, CMT, GameTrailers, Logo, Spike, The N and VH1 available within MediaSmart’s TV module. The MediaSmart software was first brought to HP HDX notebook PCs in September, using the interface first popularized on HP TouchSmart PCs. HP plans to include the software in the tx2 and all future HP consumer notebook PCs. Powered by the AMD Turion™ X2 Ultra Dual-Core Mobile Processor or AMD Turion X2 Dual-Core Mobile Processor(3) and built on Windows® Vista Home Premium, the tx2 will be made available worldwide in an array of configure-to-order options.
Additional features, accessories and service
The HP TouchSmart tx2 series is ENERGY STAR® qualified and EPEAT™ Silver registered. Mercury-free LED display panels are included on the tx2 as part of HP’s ongoing commitment to reduce its impact on the environment.
The HP tx Series Notebook Stand elevates the tx2, enhancing its usability while stationary, including making it possible to put the PC in an upright position for full interactivity with the touch screen. HP Webcam with Integrated Microphones allows users to see brighter, cleaner images when chatting over an Internet(1) connection. The tx2 offers access to a variety of self-help tools built in and online. It also is supported by HP Total Care, which enables consumers to reach support agents 24/7 by phone, email or real-time chat.
Pricing and availability
The HP TouchSmart tx2 is available for ordering today in the United States at www.hpdirect.com with a starting U.S. price of $1,149.(4) More information about the tx2 is available at www.hp.com/go/touchsmarttx2.

HP today announced breakthrough networking, storage and server technologies that reduce costs, increase bandwidth flexibility and improve overall performance of virtual server environments. The HP Virtual Connect Flex-10 Ethernet module, a direct connect storage bundle for HP BladeSystem, and the HP ProLiant DL385 G5p server are among HP’s offerings that are helping customers efficiently deploy their virtualized infrastructures. While a growing number of companies deploy server virtualization to gain operational savings within their technology infrastructures, the cost of networking virtual servers continues to climb – for example, a typical server that hosts virtual machines requires six network connections.(1) To reap the benefits of their virtualized environments, companies are finding it necessary to invest in additional networking equipment, including network expansion cards, switches and cables. For example, customers must purchase expensive network switches in either one Gigabit (Gb) or 10Gb increments to meet the increased bandwidth required for additional virtual server workloads. HP’s new Virtual Connect Flex-10 Ethernet module is the industry’s first interconnect technology that can allocate the bandwidth of a 10Gb Ethernet network port across four network interface card (NIC) connections. This increase in bandwidth flexibility eliminates the need for additional network hardware. As a result, customers deploying virtual machines and utilizing Virtual Connect Flex-10 can realize savings of up to 55 percent in network equipment costs.(2) Virtual Connect Flex-10 can save 240 watts of power per HP BladeSystem enclosure – or 3,150 kilowatt hours per year – compared to existing networking technologies.(3) “Customers looking to eliminate the common obstacles of networking costs and bandwidth flexibility should look no further than HP,” said Mark Potter, vice president and general manager, BladeSystem, HP.
“These technologies break down the barriers of virtualized networks, giving customers the greatest return on their investments.”
Industry-leading cost benefits and four-to-one network consolidation
HP Virtual Connect Flex-10 distributes the capacity of a 10Gb Ethernet port into four connections, and enables customers to assign different bandwidth requirements to each connection. Optimizing bandwidth based on application workload requirements enables customers to leverage their 10Gb investments across multiple connections, supporting virtual machine environments and other network intensive applications. This reduces overall network costs and power usage by provisioning network bandwidth more efficiently. The recently announced HP ProLiant BL495c virtualization blade includes built-in Virtual Connect Flex-10 functionality that enables it to support up to 24 NIC connections. With increased network bandwidth and memory capacity, the BL495c can accommodate more virtual servers than competing blade server offerings on the market.(4) Existing HP ProLiant c-Class blade customers can upgrade to Virtual Connect Flex-10 with the new HP NC532m Flex-10 expansion card.
Simple, cost-effective storage expansion for HP BladeSystem customers
HP’s new direct connect storage bundle for HP BladeSystem includes two HP StorageWorks 3Gb serial attached SCSI (SAS) BL switches and an MSA2000sa storage array. Traditionally, BladeSystem server administrators have had limited direct-attach or shared storage options and have had to rely on personnel with specialized knowledge to build a storage area network (SAN) based solution. This new low-cost, reliable storage option allows server administrators to easily deploy scalable shared SAS storage without the costs and complexity SANs require. By simply purchasing additional MSA2000sa arrays, customers can deploy up to 192 terabytes of external shared storage directly connected to an HP BladeSystem enclosure.
The combination of the HP ProLiant BL495c virtualization blade server, Virtual Connect Flex-10 modules and the shared SAS storage bundle reduces the cost per virtual machine by more than 50 percent when compared to competitive solutions.(5) HP has enhanced its Virtual Connect 4Gb Fibre Channel module to allocate storage resources on a per virtual machine basis. This further simplifies storage and virtualization deployments for Fibre Channel storage customers. Customers can assign up to 128 separate SAN volumes per server blade for greater performance and flexibility.
Innovative server design removes bottlenecks to virtual server performance

The new HP ProLiant DL385 G5p is a rack-based server optimized for virtualization. It offers up to 6 terabytes of internal storage as well as double the memory and a 67-percent improvement in energy efficiency when compared to previous generations.(6) Based on the new AMD Opteron™ 2300 Series Quad-Core processor, the DL385 G5p improves application performance and expands support for virtual machines.
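The Flex-10 partitioning described above divides one 10Gb port into four connections, each with its own bandwidth, and at bottom that is a capacity check. The Python sketch below is a hypothetical model for illustration only and is not HP's configuration interface. The `FlexNic` class, the `validate_allocation` function, the 100 Mb/s allocation step and the workload names are all assumptions.

```python
from __future__ import annotations

from dataclasses import dataclass

PORT_CAPACITY_MB = 10_000  # one 10Gb Ethernet port, in Mb/s
MAX_CONNECTIONS = 4        # Flex-10 divides a port into four NIC connections
STEP_MB = 100              # assumed allocation step; illustrative, not from HP documentation


@dataclass
class FlexNic:
    """Hypothetical model of one logical NIC connection carved out of a 10Gb port."""
    name: str
    bandwidth_mb: int


def validate_allocation(nics: list[FlexNic]) -> int:
    """Check a proposed split of one port; return the unallocated bandwidth in Mb/s.

    Raises ValueError if the plan uses more than four connections, contains a
    non-positive or off-step value, or oversubscribes the port.
    """
    if len(nics) > MAX_CONNECTIONS:
        raise ValueError(f"at most {MAX_CONNECTIONS} connections per port")
    for nic in nics:
        if nic.bandwidth_mb <= 0 or nic.bandwidth_mb % STEP_MB != 0:
            raise ValueError(
                f"{nic.name}: bandwidth must be a positive multiple of {STEP_MB} Mb/s"
            )
    total = sum(nic.bandwidth_mb for nic in nics)
    if total > PORT_CAPACITY_MB:
        raise ValueError(f"plan needs {total} Mb/s but the port has {PORT_CAPACITY_MB}")
    return PORT_CAPACITY_MB - total


if __name__ == "__main__":
    # Illustrative split for a host running virtual machines; workload names are hypothetical.
    plan = [
        FlexNic("vm-traffic", 6_000),
        FlexNic("live-migration", 2_000),
        FlexNic("management", 500),
        FlexNic("backup", 1_500),
    ]
    print(validate_allocation(plan))  # prints 0: the full 10Gb is allocated
```

Because the check does not depend on any management tool, you can compare alternative splits before applying anything to hardware. For example, you could give virtual-machine traffic more bandwidth and backup less.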

Expanding to assess readiness for Microsoft Online Services, SQL Server 2008, Forefront Client Security, and Network Access Protection
Many of you are in the midst of planning an IT migration or upgrade but do not know with 100% certainty what computers are in your IT environment or what applications have been deployed. The Microsoft Assessment and Planning (MAP) Toolkit 3.2 makes it easier for customers and partners like you to quickly identify what servers, workstations, and network devices are in your IT environment. MAP also provides specific and actionable IT proposals and reports to help you get the most value out of Microsoft products and infrastructure. Today, Brad Anderson, General Manager of the Management and Solutions Division at Microsoft, announced the RTM release of the MAP Toolkit 3.2 at TechEd EMEA 2008 in Barcelona. More than 510,000 Microsoft customers and partners, including Costco Wholesale Corporation, Continental Airlines, and Banque de Luxembourg, have already downloaded and used MAP and its prior versions.
Introducing Microsoft Assessment and Planning Toolkit
The Microsoft Assessment and Planning (MAP) Toolkit is a scalable, agent-less assessment platform designed to make it easier for you to adopt the latest Microsoft technologies. In this version, MAP has expanded its assessment capabilities to include SQL Server 2008, Forefront/NAP, and Microsoft Online Services migration, and it adds a Power Savings assessment to help you “go green.” In summary, MAP Toolkit 3.2 assessment areas now include:
• SQL Server Database Instance Discovery (NEW!)
• Microsoft Online Services Needs Assessment and Survey (NEW!)
• Forefront Client Security/NAP Readiness Assessment (NEW!)
• Power Savings Calculator (NEW!)
• Virtualization Infrastructure Assessment (e.g. reporting the mapping of hosts by guests) (NEW!)
• Windows Server 2008 Hardware Assessment
• Server Consolidation Reports and Proposals (Hyper-V or Virtual Server 2005 R2)
• Windows Vista and Microsoft Office 2007 Hardware Assessment
• Desktop Security Assessment to determine whether desktops have anti-virus and anti-malware programs installed and up to date, and whether the Windows Firewall is turned on
Toolkit Features
The Microsoft Assessment and Planning Toolkit performs key functions that include hardware and device inventory, compatibility analysis, and readiness reporting. MAP uses an enterprise-scale, agent-less architecture that enables you to inventory your servers, desktops, applications, and network devices without installing any software agents on the machines being assessed. This tool can discover all computers within Active Directory and, most importantly, non-IT-managed machines such as workgroup members. Additionally, MAP can generate localized desktop readiness reports in seven languages: North American English, German, French, Japanese, Korean, Spanish, and Portuguese.
Benefits to You (Customers and Partners)
Fast and Agent-less. MAP provides secure network-wide assessment of environments of up to 100,000 computers in a matter of hours instead of days, all without deploying any software agents on the inventoried machines.
Saves Pre-Sales and Planning Time. For those of you who are IT consultants and Microsoft Partners, you know that a detailed network inventory and assessment of servers and desktops often takes days of manual labor. With MAP, you can now drastically reduce the time the same inventory takes to a matter of hours, leaving you more time to focus on critical pre-sales engagement tasks. For those of you who are IT professionals, MAP can significantly reduce the time it takes to gather the information necessary to make the business case for client and server migration, as well as for your upcoming virtualization projects.
Actionable Recommendations and Reporting. MAP offers valuable inventory and readiness assessment reports with specific upgrade recommendations, as well as virtualization candidate reports, that make it easier to get IT migration and deployment projects off the ground.
Coverage from Desktops to Servers. MAP provides technology assessment and planning recommendations for many Microsoft desktop and server products including SQL Server 2008, Forefront Client Security/NAP, Microsoft Online Services, Windows Server 2008, Hyper-V, Virtual Server 2005 R2, Windows Vista, 2007 Microsoft Office, Microsoft Application Virtualization (or App-V), System Center Virtual Machine Manager 2007, and more.
Next Steps
Try the Microsoft Assessment and Planning Toolkit 3.2 RTM version now! Tell a friend and blog about it!
Baldwin Ng
Sr. Product Manager, Microsoft Solution Accelerators Team
Reference: http://blogs.technet.com/mapblog/archive/2008/11/03/rtm-news-microsoft-assessment-and-planning-toolkit-3-2-now-available.aspx

When the computer industry buys into a buzzword, it's like getting a pop song stuck in your head. It's all you hear. Worse, the same half-dozen questions about the hyped trend are incessantly paraded out, with responses that succeed mainly in revealing how poorly understood the buzzword actually is. These days, the hottest buzzphrase is "cloud computing," and for John Willis, a systems management consultant and author of an IT management and cloud blog, the most annoying question is this: Will enterprises embrace this style of computing? "It's not a binary question," he asserts. "There will be things for the enterprise that will completely make sense and things that won't." The better question, he says, is whether you understand the various offerings and architectures that fit under that umbrella term, the scenarios where one or more of those offerings would work, and the benefits and downsides of using them. Even cloud users and proponents don't always recognize the downsides and thus don't prepare for what could go wrong, says Dave Methvin, chief technology officer at PC Pitstop LLC, which uses Amazon.com Inc.'s S3 cloud-based storage system and Google Apps. "They're trusting in the cloud too much and don't realize what the implications are," he says. With that as prologue, here are seven turbulent areas where current and potential users of cloud computing need to be particularly wary.
Costs, Part I: Cloud Infrastructure Providers
When Brad Jefferson founded Animoto Productions, a Web service that enables people to turn images and music into high-production video, he chose a Web hosting provider for the company's processing needs. Looking ahead, however, Jefferson could see that the provider wouldn't be able to meet anticipated peak processing requirements.
But rather than investing in in-house servers and staff, Jefferson turned to Amazon's Elastic Compute Cloud (EC2), a Web service that provides resizable computing capacity in the cloud, and to RightScale Inc., which provides system management for users of Web-based services such as EC2. With EC2, companies pay only for the server capacity they use, and they obtain and configure capacity over the Web. "This is a capital-intensive business," Jefferson said in a podcast interview with Willis. "We could either go the venture capital route and give away a lot of equity or go to Amazon and pay by the drink." His decision was validated in April, when usage spiked from 50 EC2 servers to 5,000 in one week. Jefferson says he never could have anticipated such needs. Even if he had, it would have cost millions to build infrastructure capable of handling that spike. And investing in that infrastructure would have been overkill, since that capacity isn't needed all the time, he says. But paying by the drink might make less economic sense once an application is used at a consistent level, Willis says. In fact, Jefferson says he might consider a hybrid approach once he has a better sense of Animoto's usage patterns: in-house servers could handle Animoto's ongoing, persistent requirements, and the cloud could absorb anything above that.
Costs, Part II: Cloud Storage Providers
Storage in the cloud is another hot topic, but it's important to evaluate the costs closely, says George Crump, founder of Storage Switzerland LLC, an analyst firm that focuses on the virtualization and storage marketplaces. At about 25 cents per gigabyte per month, cloud-based storage systems look like a huge bargain, Crump says. Although he is a proponent of cloud storage, Crump says the current cost models don't reflect how storage really works.
That's because traditional internal storage systems are designed to reduce storage costs over the life of the data by moving older and less-accessed data to less-expensive media, such as slower disk, tape or optical systems. But today, cloud companies essentially charge the same amount "from Day One to Day 700," Crump says. Amazon's formula for calculating monthly rates for its S3 cloud storage service is based on the amount of data being stored, the number of access requests made and the number of data transfers, according to Methvin. The more you do, the more you pay. Crump says that with the constant decline of storage media costs, it's not economical to store data in the cloud over a long period of time. Cloud storage vendors need to create a different pricing model, he says. One idea is to move data that hasn't been accessed in, say, six months to a slower form of media and charge less for this storage. Users would also need to agree to lower service levels on the older data. "They might charge you $200 for 64G the first year; and the next year, instead of your having to buy more storage, they'd ask permission to archive 32G of the data and charge maybe 4 cents per gigabyte," Crump explains. To further drive down their own costs and users' monthly fees, providers could store older data on systems that can power down or off when not in use, Crump says.
Sudden Code Changes
With cloud computing, companies have little to no control over when an application service provider decides to make a code change. This can wreak havoc when the code isn't thoroughly tested and doesn't work with all browsers. That's what happened to users of Los Angeles-based SiteMeter Inc.'s Web traffic analysis system this summer. SiteMeter is a software-as-a-service (SaaS) provider whose application works by injecting scripts into the HTML code of the Web pages that users want tracked. In July, the company released code that caused some problems.
Any visitor using Internet Explorer to view Web pages with embedded SiteMeter code got an error message. When users began to complain, Web site owners weren't immediately sure where the problem was. "If it were your own company pushing out live code and a problem occurred, you'd make the connection," Methvin explains. "But in this situation, the people using the cloud service started having users complaining, and it was a couple of hours later when they said, 'Maybe it's SiteMeter.' And sure enough, when they took the code out, it stopped happening." The problem with the new code was greatly magnified because something had changed in the cloud without the users' knowledge. "There was no clear audit trail that the average user of SiteMeter could see and say, 'Ah, they updated the code,' " Methvin says. Soon after, SiteMeter unexpectedly upgraded its system, quickly drawing the ire of users such as Michael van der Galien, editor of PoliGazette, a Web-based news and opinion site. The new version was "frustratingly slow and impractical," van der Galien says on his blog. In addition, he says, current users had to provide a special code to reactivate their accounts, which caused additional frustration. Negative reaction was so immediate and intense that SiteMeter quickly retreated to its old system, much to the relief of van der Galien and hundreds of other users. "Imagine Microsoft saying, 'As of this date, Word 2003 will cease to exist, and we'll be switching to 2007,' " Methvin says. "Users would all get confused and swamp the help desk, and that's kind of what happened." Over time, he says, companies such as SiteMeter will learn to use beta programs, announce changes in advance, run systems in parallel and take other measures when making changes. Meanwhile, let the buyer beware.
Service Disruptions
Given the much-discussed outages of Amazon S3, Google's Gmail and Apple's MobileMe, it's clear that cloud users need to prepare for service disruptions.
For starters, they should demand that service providers notify them of current and even potential outages. "You don't want to be caught by surprise," says Methvin, who uses both S3 and Gmail. Some vendors have relied on passive notification approaches, such as their own blogs, he says, but they're becoming more proactive. For example, some vendors are providing a status page where users can monitor problems or subscribe to RSS feeds or cell phone alerts that notify them when there's trouble. "If there's a problem, the cloud service should give you feedback as to what's wrong and how to fix it," Methvin says. Users should also create contingency plans with outages in mind. At PC Pitstop, for instance, an S3 outage would mean users couldn't purchase products on its site, since it relies on cloud storage for downloads. That's why Methvin created a fallback option. If S3 goes down, products can be downloaded from the company's own servers. PC Pitstop doesn't have a backup plan for Google Apps, but Methvin reasons that with all of its resources, Google would be able to get a system such as e-mail up and running more quickly than his own staffers could if they had to manage a complex system like Microsoft Exchange. "You lose a little bit of control, but it's not necessarily the kind of control you want to have," he says. Overall, it's important to understand your vendor's fail-over strategy and develop one for yourself. For instance, Palo Alto Software Inc. offers a cloud-based e-mail system that uses a caching strategy to enable continuous use during an outage. Called Email Center Pro, the system relies on S3 for primary storage, but it's designed so that if S3 goes down, users can still view locally cached copies of recent e-mails. Forrester Research Inc. advises customers to ask whether the cloud service provider has geographically dispersed redundancy built into its architecture and how long it would take to get service running on backup. 
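PC Pitstop serves downloads from its own servers when S3 is unavailable, which is a form of ordered failover: try the primary source first, then move to the next one if it fails. The Python sketch below assumes plain HTTP downloads. The function names, mirror URLs and five-second timeout are hypothetical, and the sketch does not describe how PC Pitstop actually implemented its fallback.

```python
from __future__ import annotations

from typing import Callable, Sequence
from urllib.request import urlopen


def http_fetch(url: str, timeout: float = 5.0) -> bytes:
    """Download a URL with a short timeout so an outage fails fast."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def fetch_with_fallback(
    path: str,
    mirrors: Sequence[str],
    fetch: Callable[[str], bytes] = http_fetch,
) -> tuple[str, bytes]:
    """Try each mirror in order; return (mirror_used, data) from the first that works.

    URLError, HTTPError and socket timeouts are all subclasses of OSError,
    so catching OSError covers connection failures, HTTP error statuses and timeouts.
    """
    failures: list[str] = []
    for base in mirrors:
        url = f"{base.rstrip('/')}/{path.lstrip('/')}"
        try:
            return base, fetch(url)
        except OSError as exc:
            failures.append(f"{url}: {exc}")
    raise RuntimeError("all mirrors failed: " + "; ".join(failures))


if __name__ == "__main__":
    # Hypothetical mirrors: cloud storage first, the company's own server second.
    mirrors = [
        "https://example-bucket.s3.amazonaws.com/downloads",
        "https://downloads.example.com",
    ]

    # Simulated outage of the cloud mirror, so the demo needs no network access.
    def simulated_fetch(url: str) -> bytes:
        if "s3.amazonaws.com" in url:
            raise OSError("simulated S3 outage")
        return b"installer bytes"

    used, data = fetch_with_fallback("setup.exe", mirrors, simulated_fetch)
    print(used)  # https://downloads.example.com
```

The download function is passed in as a parameter, so the failover order stays separate from the transport. That separation is what lets the simulated outage exercise the fallback path without touching the network.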
Others advise prospective users to discuss service-level agreements with vendors and arrange for outage compensation. Many vendors reimburse customers for lost service. Amazon.com, for example, applies a 10% credit if S3 availability dips below 99.9% in a month.
Vendor Expertise
One of the biggest enticements of cloud computing is the promise of IT without the IT staff. However, veteran cloud users are adamant that this is not what you get. In fact, since many cloud vendors are new companies, their expertise -- especially with enterprise-level needs -- can be thin, says Rene Bonvanie, senior vice president at Serena Software Inc. It's essential to supplement providers' skills with those of your own in-house staff, he adds. "The reality is that most of the companies operating these services are not nearly as experienced as we hoped they would be," Bonvanie says. The inexperience shows up in application stability, especially when users need to integrate applications for functions like cross-application customer reporting, he says. Serena itself provides a cloud-based application life-cycle management system, and it has decided to run most of its own business in the cloud as well. It uses a suite of office productivity applications from Google, a marketing automation application from MarketBright Inc. and an on-demand billing system from Aria Systems Inc. So far, it has pushed its sales and marketing automation, payroll, intranet management, collaboration software and content management systems to the cloud. The only noncloud application is SAP, for which Serena outsourced management to an offshore firm. According to Bonvanie, "the elimination of labor associated with cloud computing is greatly exaggerated." The onus is still on the cloud consumer when it comes to integration. "Not only are you dealing with more moving parts, but they're not always as stable as you might think," he says.
"Today, there's no complete suite of SaaS applications, no equivalent of Oracle or R/3, and I don't think there ever will be," Bonvanie says. "Therefore, we in IT get a few more things pushed to us that are, quite honestly, not trivial." Global ConcernsCloud vendors today have a U.S.-centric view of providing services, and they need to adjust to the response-time needs of users around the world, says Reuven Cohen , founder and chief technologist at Enomaly Inc., a cloud infrastructure provider. This means ensuring that the application performs as well for users in, say, London as it does for those in Cincinnati. Bonvanie agrees. Some cloud vendors "forget that we're more distributed than they are," he says. For instance, San Bruno, Calif.-based MarketBright's cloud-based marketing application works great for Serena's marketing department in Redwood City, Calif., but performance diminished when personnel in Australia and India began using it. "People should investigate whether the vendor has optimized the application to work well around the world," Bonvanie says. "Don't just do an evaluation a few miles from where the hardware sits." Worldwide optimization can be accomplished either by situating servers globally or by relying on a Web application acceleration service, also called a content delivery network, such as that of Akamai Technologies Inc. These systems work across the Internet to improve performance, scalability and cost efficiency for users. Of course, situating servers globally can raise thorny geopolitical issues, Willis points out. Although it would be great to be able to load-balance application servers on demand in the Pacific Rim, Russia, China or Australia, the industry "isn't even close to that yet," he says. "We haven't even started that whole geopolitical discussion." In fact, Cohen points out, some users outside of the U.S. are wary of hosting data on servers in this country. 
They cite the USA Patriot Act, which expands the ability of law enforcement agencies to search telephone and e-mail communications and medical and financial records, and eases restrictions on foreign-intelligence gathering within the U.S. The Canadian government, for instance, prohibits the export of certain personal data to the U.S. "It's hazy and not well defined," Cohen says of the act. "People wonder, 'Can they just go in and take [the data] at a moment's notice, with no notification beforehand?' That's a whole second set of problems to be addressed."
Non-native Applications
Some applications offered on SaaS platforms were originally designed for SaaS; others were rebuilt to work that way. For example, Bonvanie says, there's a very big difference between applications like WebEx and Salesforce.com, which were designed as SaaS offerings, and Aria's billing platform, which was not. "It's highly complex and fits in the cloud, but its origins are not cloud-based," he says. "If the offering was not born [in] but moved to the cloud, you deal with a different set of restrictions as far as how you can change it." Whatever "cloud computing" is to you (an annoying buzzphrase or a vehicle that might power your company into the future), it's essential to get to know what it really means, how it fits into your computing architecture and what storms you may encounter en route to the cloud. The author is a Computerworld contributing writer in Newton, Mass. Contact her at marybrandel@verizon.net. This version of the story originally appeared in Computerworld's print edition. Reference : http://www.pcworld.com/article/153219/cloud_computing_gotcha.html?tk=rss_news

Microsoft unleashed a new cloud computing ecosystem, Windows Azure, at its recent Professional Developers Conference, although most observers chose to focus on more obvious, though less important, aspects of the announcement. Essentially, Microsoft is going to create a Windows-based cloud infrastructure. Many of the details of its ultimate offering are still unclear, and it chose to discuss Azure primarily in terms of how it enables Microsoft-offered hosting (dubbed Azure Services Platform) of Microsoft applications like Exchange, Live Services, .NET Services, SQL Server, SharePoint, and Dynamics CRM. I'm surprised that so many commentators (and presumably Microsoft itself) chose to discuss this offering in terms of Microsoft offering SaaS versions of its products. While I think this approach is interesting, it falls short of revolutionary; by contrast, the revolutionary aspects of Azure have barely been touched on in all the commentary about the new offering. Let me offer my take on what's really interesting about Azure. Microsoft offering hosted versions (aka SaaS) of its applications is interesting; however, plenty of companies already offer hosted versions of its server products (e.g., Exchange, SharePoint), so the mere fact of these apps being available in "the cloud" is nothing new. However, Microsoft has some interesting flexibility here. Other businesses offering hosted versions of these apps have to obtain licenses for them and pay some amount related to the list price of the product. By contrast, Microsoft, as the producer of the products, can, should it choose, price its hosted version nearer the marginal license cost of an instance, i.e., near zero. Of course, Microsoft still has to pay for the infrastructure, operations, etc., but it can clearly, should it choose, obtain a price advantage compared to competing offerings. This leads us to the next point: infrastructure pricing.
Microsoft, based on the cash flow from its packaged software offerings, clearly has a capital cost advantage over its competitors for hosting Microsoft applications. And, based on its experience hosting Hotmail, Microsoft clearly has the operational experience to scale an infrastructure cost-effectively. Added to its ability to price software licenses at the margin, this obviously gives Microsoft the ability to be the low-cost provider of Microsoft application hosting. And this advantage doesn't even include the (dare I say it) synergies available to it based on its common ownership of the cloud offering and the applications themselves. However, focusing on these aspects of the offering is missing the forest for the trees, so to speak; perhaps a better way to say it is that it focuses on the low-hanging fruit without realizing there is much more, and sweeter, fruit available just slightly beyond the low-hanging stuff. And that's where Azure gets interesting. First and foremost, Azure offers a way for Microsoft-based applications to be deployed in the cloud. All of the cloud offerings thus far have been Linux-oriented and required Linux-oriented skills. This has been fine for the first generation of cloud developers: they're early adopters most likely to have advanced skills. There is an enormous base of mainstream developers with Windows-based skills, though; corporations are stuffed with Windows developers. Before Azure, these developers were blocked from developing cloud-based applications. With Azure, they can participate in the cloud, which is why the other elements of the announcement relating to .NET and SQL Server are so important. These capabilities of Azure will accelerate cloud adoption by enterprises. So Azure's support of the Windows development infrastructure is a big deal.
Cloud Economics Meets Windows Infrastructure
But even that isn't as important as what else Azure will provide: the mix of cloud economics and innovation with Windows infrastructure. One can argue that other providers (e.g., Amazon Web Services) could offer the same capability of hosting Windows infrastructure, so Azure, at first blush, might not seem so important. However, these offerings would face the same issue alluded to earlier, namely, Microsoft's competitive advantage available through marginal license pricing (of course, that might raise antitrust issues, so the advantage might actually be moot). What is not in doubt, however, is that Microsoft has an unanswerable advantage: it can extend its components' architectures, at least theoretically, to be better suited to cloud infrastructure. That is to say, Microsoft could, say, take .NET, which today is primarily focused on operating on a single server, and extend it to operate transparently on a farm of servers, scaling up and down depending on load. And this is where Azure could (could) get revolutionary. Marrying today's widely distributed Microsoft skill base with a cloud-capable architecture based on established Microsoft component development approaches, APIs, etc., could unleash a wave of innovation at least as great as the innovation already seen in EC2 (see this previous blog posting for some insight about today's cloud innovation). In fact, given the relative skill distribution of Linux vs. Windows, one could expect the Azure wave to be even larger. Of course, this is all future tense, and by no means certain. Microsoft has, in the past, announced many, many initiatives that ultimately fizzled out. More challenging, perhaps, is how a company with large, established revenue streams will nurture a new offering that might clash with those established streams. This is Clayton Christensen territory.
It can be all too tempting to skew a new offering to "better integrate" with current successful products, to the detriment of the newcomer. Microsoft has a mixed track record in this regard. I won't make a prediction about how it will turn out, but it will be a real challenge. However, cloud computing is, to my mind at least, too important to fail at. Cloud computing is at least as important as the move to distributed processing. If you track what distributed processing has meant to business and society (a computer on every desk and in every home, etc.), you begin to appreciate why Microsoft has to address the cloud successfully. Azure is a bet-the-company initiative, and there's a reason such initiatives are called bet-the-company: they're too important to fail at. So Microsoft needs to get Azure right. Bernard Golden is CEO of consulting firm HyperStratus, which specializes in virtualization, cloud computing and related issues. He is also the author of "Virtualization for Dummies," the best-selling book on virtualization to date. Reference : http://www.pcworld.com/article/153216/ms_windows_azure.html?tk=rss_news

Reader Dave Bradley is trading up, but would like to take his Boot Camp partition along for the ride. He writes: I'm planning to replace the hard drive in my MacBook Pro with a higher-capacity drive. On that MacBook Pro I have both a partition for my Mac stuff and a Boot Camp partition that has Windows on it. I'm going to use Carbon Copy Cloner to clone the Mac partition to an external drive I have and then restore it to the new drive, but how do I make a copy of the Boot Camp partition? I'll begin by saying that it's possible. I'll follow that by suggesting that unless you've spent days configuring Windows you might be better off with a fresh install of Boot Camp and Windows. I don't think I'm telling secrets out of school in saying that it takes Windows very little time to get completely junked up. Sometimes starting over is the best course. But if that means hours and hours of additional work, then cloning and restoring may be your preference. To do that, grab a copy of Two Canoes Software's free Winclone. I used it last week to perform an operation similar to the one you're about to undertake and it worked beautifully. Just launch Winclone, choose your Boot Camp partition from the Source menu, and click the Image button. As the button's name suggests, this creates an image of that partition and saves it on the Mac side of the drive. Now clone the Mac partition and then swap the drives. Once you've swapped the drives and restored the Mac side, launch Winclone on the new drive, click the Restore tab, drag the Boot Camp image into Winclone's Restore Image field, and click Restore. Winclone will create a new Boot Camp partition on your drive and restore its contents from the image you created earlier. Note that thanks to Microsoft's Draconian Windows activation scheme it's highly likely that you'll have to activate Windows again. 
When I did this, online activation was a bust as Microsoft believed that I was trying to exceed my activation limit (because Windows was tied to my old hard drive). Go immediately to phone activation, as telling the nice automated operator that you've installed Windows on only one computer seems to satisfy her to the point that she's willing to cough up the seemingly endless string of numbers that allow you to activate Windows. Reference : http://www.pcworld.com/article/153238/.html?tk=rss_news

Apple is likely to cut production of its hot-selling iPhone 3G handset by up to 40 percent during the current quarter, an analyst warned Monday, saying the expected change signals weaker demand for consumer electronics. But the prediction drew criticism from Apple observers, who said the situation isn't so grim. "That the firm's iPhone production plans are being revised lower suggests that the global macroeconomic weakness is impacting even high-end consumers, those that are more likely to buy Apple's expensive gadgets, and that no market segment will be spared in this global downturn," wrote Craig Berger, an analyst at FBR Capital Markets, an investment bank. The forecast is significantly more pessimistic than Berger's earlier prediction, announced last month, that Apple would cut its iPhone production by 10 percent. As a result of the expected production cut, Berger said, key component suppliers, including Broadcom, Marvell, and Infineon, among others, will see lower revenue during the period. The pessimistic report drew flak from some Apple watchers, including Fortune's Philip Elmer-DeWitt, who panned the forecast in a blog post titled "The Apple analyst who couldn't shoot straight." "Sounds pretty scary. But perhaps best taken with a grain of salt, given Berger's track record with Apple," he wrote. Elmer-DeWitt blasted Berger for previous calls on Apple and said Berger was not on a list of analysts who dialed in to Apple's latest conference call with financial analysts. But the list Elmer-DeWitt cited, contained in a transcript of the call prepared by SeekingAlpha, appears to list only the financial analysts who asked questions during the call and does not appear to be an exhaustive list of analysts listening in. Berger and Apple could not immediately be reached for comment. However, Apple sounded a note of caution last month when it released fiscal fourth-quarter results, citing an uncertain economic environment.
"Looking ahead, visibility is low and forecasting is challenging, and as a result we are going to be prudent in predicting the December quarter," the company said in a press release. Reference : http://www.pcworld.com/article/153246/.html?tk=rss_news

The key to a successful Agile migration is having the change driven from within, by key players throughout the company. Once these people are formed into a team, they will become evangelists to the entire company. The role of this group, which I call the Agile Core Team, is to learn as much as they can about Agile and use this knowledge to outline a custom Agile methodology for the company. The team will collaborate and reach consensus on new processes, then mentor project teams as they use the Agile techniques. This core team is powerful and influential for three reasons:
1. They are not a part of line management. There will be very few members from the management ranks; the majority of the team will be "doers," the people who actually design, build, create, and test the code. This adds credibility as the methodology is rolled out to the company. It is not a management initiative being forced upon everyone; it is coming from real people who will be a part of the project teams.
2. Since the team is composed of doers, they actually know the ins and outs of developing in your environment. This is different from consultants coming in, suggesting standard practices, and disregarding the realities of a specific company. The Agile Core Team has experience with your company, and they will use that experience to decide which existing practices to keep and which to discard.
3. Remember our earlier discussion of awareness, buy-in, and ownership? What better way to create awareness than to have Agile Core Team members come from each functional area? Imagine a member from quality going back to the quality team and telling them what is going on with the new methodology, or a developer doing the same with the development team. Having team members from all areas will initiate awareness across the company.
Many companies use outside consulting to get their methodology going.
I have seen several companies choose Agile methods such as Scrum and then have a third party come in to train, design, and deploy the methodology. In my opinion, this approach is not as effective as growing the methodology from within. Creating it within the organization addresses all of the issues with ownership; it is hard to get a team to buy into a process that was forced upon them. Note that there are occasions when an organization is so dysfunctional that it needs to have a methodology forced upon it. This is the exception, not the norm.
Obtain Team Members From All Areas
Once you obtain executive support, you can pursue creation of the Agile Core Team. Your sponsor will probably suggest managers for the team, but you need to remind him that part of the power and influence of the core team is that they are "doers." You might also find yourself pursuing the best and brightest people from each area: people with a positive attitude and a pro-Agile mentality, people who are open-minded about change. These would be excellent attributes to list on a job opening, but would they reflect your current employee mix, the people you want to embrace the new methodology? Probably not. If your company is like most, you probably have some mix of the following:
- Brilliant and collaborative people
- People who are brilliant but difficult to work with
- People who challenge every initiative
- People who loathe change and avoid it at all costs
You need to make sure the makeup of the core team is similar to the makeup of the company. This will help you obtain buy-in from all types when you begin the roll-out. After determining the types of people for the team, you need to determine team size: a group large enough to capture a diverse set of perspectives but small enough to be, yes, "Agile." I suggest somewhere between 5 and 10 people.
Note that if the team is larger, you can still make progress when a team member is pulled away for a production issue or is out on vacation or sick. To give you a feel for creating your own team, let's return to Acme Media. We find that Wendy Johnson has obtained the CIO, Steve Winters, as the executive sponsor for the Agile migration. Amazingly, Steve has asked to be on the core team, and he says he can commit the 6 hours per week requested of team members. Wendy and Steve have also identified their Agile Core Team and have received approval from the managers of the people selected. They worked hard to get a diverse group of people on the team so that many perspectives would be considered in creating the methodology. Their team member list can be seen in Table 1. You will notice that members come from various functional areas and that they all have different points of view on what a methodology should do, just like your team will. Just like Acme, you will need to get manager approval for the employees you select for your team. The managers will probably be looking for a time estimate from you. For the first few weeks, I like to see the core team meet twice a week for two hours each meeting. You can also assume each team member will get a couple of hours of Agile homework a week (researching existing development methods, etc.). A good number to give the managers is 6 hours a week for three months. The actual time commitment will decrease over the three months; you may see the weekly meetings reduced to one hour, but to be safe, still ask for 6 hours per week for three months. Better to promise late and deliver early. After team selection, you need to meet with the members and set expectations. This article is based on Becoming Agile... in an imperfect world by Greg Smith and Ahmed Sidky, to be published in February 2009. It is being reproduced here by permission from Manning Publications. Manning early access books and ebooks are sold exclusively through Manning.
Visit the book's page for more information. Reference : http://www.pcworld.com/article/153213/core_dev_team_agile.html?tk=rss_news

As competition in the mobile phone market heats up, two companies with flagging momentum are teaming up to try to compete better with the market leaders. Microsoft and LG on Monday said that they plan to work closely together, collaborating on research and development, marketing, applications and services for converged mobile devices. The announcement came from Seoul, Korea, LG's base, during a trip there by Steve Ballmer, Microsoft's CEO. The collaboration is not a first for the companies; LG has already been making phones that run Windows Mobile. But the agreement could signal a stepped-up effort by both companies to beat the competition. While LG has had some hot sellers, such as the Chocolate, it now faces challenges from new competitors Apple and Google, which are generating more excitement. Google just two weeks ago introduced the first phone to run its Android software, HTC's G1, and Apple's iPhone continues to build momentum. Microsoft, too, has been affected by the new competitors. It has been working recently to position Windows Mobile, traditionally regarded as solely a business tool, as also useful for personal applications. LG has traditionally focused on phones that would appeal most to mass-market consumers. Both companies have slipped in the rankings recently. A recent Gartner study showed that worldwide, Microsoft slipped to third place among providers of smartphone operating systems in the second quarter of this year, behind No. 1 Symbian and No. 2 Research In Motion. In the same quarter last year, Microsoft beat out RIM for second position. A recent IDC study showed that LG slipped to the number-five position worldwide among mobile phone makers during the third quarter of this year. In a statement, the companies said they expected that their agreement would create momentum and innovation in the industry. Reference : http://www.pcworld.com/article/153232/.html?tk=rss_news

Microsoft is clearly using Tech Ed IT Pro in Barcelona to start revealing details about new features in the upcoming releases of Windows 7 and Windows Server 2008 R2. One of the first sessions after the opening keynote was about new networking features in these releases. The enhancements clearly focus on making Windows work better from outside the corporate network and in branch office situations. Two prominent new features are:
- DirectAccess
- BranchCache
DirectAccess is Microsoft's implementation of what I have earlier referred to as DirectConnect. The technology enables users to access resources on the corporate network from a corporate system over the Internet, without a VPN, using IPv6 and IPsec. Steve Riley already demonstrated this technology at Tech Ed in Orlando. It will be implemented as a client in Windows 7 and as a role in Windows Server 2008 R2.
BranchCache is a new caching technology that locally caches data retrieved over a WAN link using SMB or HTTP(S). This enables the next user who needs the same piece of information to retrieve it without pulling all the data over the line again. The demos showed a substantial gain in speed. BranchCache is implemented in such a way that it preserves the security of the information. BranchCache can be implemented in two modes:
- BranchCache Distributed Cache
- BranchCache Hosted Cache
BranchCache Distributed Cache provides a peer-to-peer mechanism for caching data at the branch office. In this mode, Windows 7 systems ask each other for a local copy of the data before pulling it over the WAN link. Each time the data is fetched, the client checks with the originating server that the data has not changed and that the security settings allow access. BranchCache Distributed Cache only works for clients at the branch office that are on the same subnet.
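The Distributed Cache lookup described above can be sketched in a few lines. This is a simplified Python model, not Microsoft's BranchCache protocol. `OriginServer`, `BranchClient`, `read_file`, the SHA-256 content hash and the access-list check are all hypothetical stand-ins. They illustrate the two rules the session described: ask peers before using the WAN, and always re-check freshness and permissions with the originating server. Subnet discovery is not modeled.

```python
import hashlib
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Set


def digest(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


@dataclass
class OriginServer:
    """Hypothetical head-office file server reached over the WAN link."""
    files: Dict[str, bytes]
    acl: Dict[str, Set[str]]
    wan_transfers: int = 0

    def check(self, user: str, path: str) -> Optional[str]:
        """Small round trip: confirm access and return the current content hash."""
        if user not in self.acl.get(path, set()):
            return None
        return digest(self.files[path])

    def fetch(self, path: str) -> bytes:
        """Full transfer of the file contents over the WAN."""
        self.wan_transfers += 1
        return self.files[path]


@dataclass
class BranchClient:
    """A branch-office PC whose local cache is keyed by content hash."""
    cache: Dict[str, bytes] = field(default_factory=dict)


def read_file(user: str, path: str, origin: OriginServer,
              me: BranchClient, peers: List[BranchClient]) -> bytes:
    # 1. Always ask the origin whether access is allowed and what the current
    #    version is; a changed file gets a new hash, so stale copies never match.
    expected = origin.check(user, path)
    if expected is None:
        raise PermissionError(f"{user} may not read {path}")
    # 2. Look for a matching copy locally, then on peers in the branch office.
    for node in [me, *peers]:
        data = node.cache.get(expected)
        if data is not None and digest(data) == expected:
            me.cache[expected] = data
            return data
    # 3. Cache miss: pull the data over the WAN and keep a copy for others.
    data = origin.fetch(path)
    me.cache[expected] = data
    return data
```

Passing a single branch-office server as the only entry in `peers` would roughly approximate the Hosted Cache mode.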
With BranchCache Hosted Cache, a Windows Server 2008 R2 server at the branch office is assigned to hold a cached copy of data retrieved over the WAN link. This server is configured with the BranchCache role and designated as the caching server through Group Policy. It acts as the caching server for all Windows 7 clients at the branch office, regardless of their subnet. The only downside I see in BranchCache is that you need Windows Server 2008 R2/Windows 7 on both sides of the connection. Reference : http://www.xpworld.com/

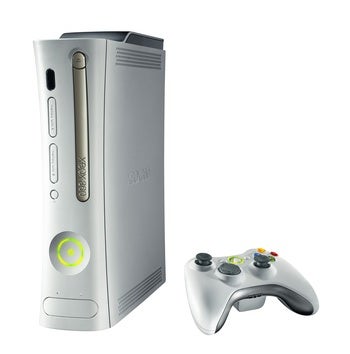

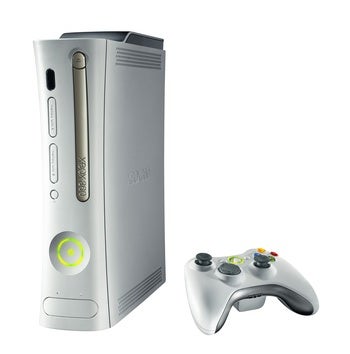

The Xbox 360 has been through quite the public crucible of late. In the last 12 months, it's added a brand new model, re-priced its peripherals, dropped the bottom line cost of all its configurations, and in just a few weeks, it'll completely switch out the face it offers to gamers when powered on (see my review of that change-up, parts one and two). Whatever you think of those changes, you can justifiably say 2008 has been an incredibly transformative one for a system that only launched three years ago. So it's not surprising to read that Microsoft's Stephen McGill told VideoGamer.com the price cuts are done, finished, fini, and that the company is "not dropping the price for many many years in the future." While McGill would technically be speaking on behalf of the UK market, Microsoft has tended to structure the 360's pricing internationally, so it's likely his statement applies universally. According to McGill:

I'm not going to speculate where it might go (the price of the Xbox 360) in five or ten years time, whatever. But we've obviously just reduced the price and that's because we can pass the costs reductions we have straight on to consumers.

...a lot of people have been waiting for it to become even more affordable and they're now seeing that benefit and buying it in their droves and we can now hopefully continue it through Christmas and beyond."

That makes added sense given that Microsoft is selling well against Sony's PS3. Sony's Ray Maguire also said recently that the PS3 won't be dropping so much as a penny this holiday season, so there's no real pressure for Microsoft to double its flanking maneuvers. That said, is it time for Sony to drop the PS3's price? Have a look at my thoughts on that touchy matter and decide for yourself. Reference : http://www.pcworld.com/article/153200/no_xbox_price_drop.html?tk=rss_news

Microsoft's latest security report shows that the number of new vulnerabilities found in its software was lower in the first half of the year than in the last half of 2007, with Windows Vista proving more resistant to exploits than XP. Microsoft reported 77 vulnerabilities from January to June, compared to 116 for the last six months of 2007, according to the company's fifth Security Intelligence Report. The decline is in line with the software industry as a whole, which saw a 19 percent decrease in vulnerability disclosures compared to the first half of 2007, Microsoft said. However, vulnerabilities considered highly severe rose 13 percent. Exploit code was available for about a third of the 77 vulnerabilities; however, reliable exploit code was available for only eight of them. Other data shows that XP is attacked more frequently than Vista. On XP machines, Microsoft's own software contained 42 percent of the vulnerabilities attacked, while 58 percent were in third-party software. On Vista machines, Microsoft's software had 6 percent of the vulnerabilities attacked, with third-party software containing 94 percent. New security technologies such as address space layout randomization have led to fewer successful attacks against Vista, said Vinny Gullotto, general manager of Microsoft's malware protection center. "Moving onto Vista is clearly a safe bet," Gullotto said. "For us, it's a clear indicator that attacking Vista or trying to exploit Vista specifically is becoming much more difficult." The highest number of exploits was released for the Windows 2000 and Windows Server 2003 operating systems, Microsoft said. Hackers appear to be increasingly targeting Internet surfers who speak Chinese: Microsoft found that 47 percent of browser-based exploits were executed against systems with Chinese set as the system language.
The most popular browser-based exploit is for the MDAC (Microsoft Data Access Components) bug that was patched (MS06-014) by Microsoft in April 2006. Some 12.1 percent of all exploits encountered on the Internet targeted that flaw. The second most encountered exploit is one aimed at a vulnerability in the RealPlayer multimedia software, CVE-2007-5601. The two most commonly exploited vulnerabilities in Windows Vista concerned ActiveX controls that are commonly installed in China, Microsoft said. Gullotto said Microsoft is continuing to improve the Malicious Software Removal Tool (MSRT), a free but very basic security application that can remove some of the most common malware families. Last month, Microsoft added detection for "Antivirus XP," one of several questionable programs that warn users their PC is infected with malware, Gullotto said. The program badgers users to buy the software, which is of questionable utility. "Antivirus XP" is also very difficult to remove. Microsoft fielded some 1,000 calls a month about Antivirus XP on its PC Safety line, where users can call and ask security questions. Since the MSRT started automatically removing the program, calls concerning Antivirus XP dropped by half the first week, Gullotto said. Reference : http://www.pcworld.com/article/153193/windows_security.html?tk=rss_news

I wouldn't touch this with a ten-foot pole, personally, but if you're an insufferably curious geek, it seems you can pull down a pirated early version of Microsoft's New Xbox Experience. The NXE is Microsoft's impending total makeover of its Xbox 360 dashboard, which I recently previewed. I say "early version" because I can confirm Microsoft has been releasing periodic updates to the NXE, so it's probably not the most recent version. Furthermore, Microsoft has a very simple means of tracking who's supposed to have access and who isn't. I won't say how, but use your imagination and it'll come to you. If you download the update and connect, they'll also know you're not a card-carrying club member, at which point, says Microsoft, it's bye-bye Xbox Live for you. In case you think that's just grandstanding, Xbox Live director of programming Larry Hryb, aka "Major Nelson," Twittered the following on Saturday:

A little reminder: If you get your hands on NXE and you are NOT in the Preview Program. You won't be allowed on LIVE.

November 19th is just a few weeks away. Just wait. Besides, aren't Fable 2 and Fallout 3 enough?

Japanese electronics giant Panasonic has agreed to buy Sanyo Electric, the Nikkei business daily reported Monday. Panasonic and Sanyo will hold board meetings by the end of this week to approve the deal and a formal announcement could come as early as Friday, according to the report, which did not cite sources for the information. The resulting company will be Japan's largest electronics maker, the Nikkei said. The deal may come as a relief to Sanyo, which has been struggling to turn around its business for the past few years. The world's largest manufacturer of lithium-ion batteries posted its first profit in four years in its most recent fiscal year, which ended in March 2008. Sanyo also makes a range of electronics products including digital video cameras, as well as green energy devices such as solar cells. Reference : http://www.pcworld.com/article/153184/panasonic_buy_sanyo.html?tk=rss_news

Netflix has expanded the online component of its movie rental service to include any Intel Mac user who volunteers to join a new public beta. The test version of Watch Instantly is open to any Mac user with both a qualifying Netflix subscription and a system capable of playing video in Microsoft's Silverlight web plugin. Previously limited to a controlled rollout, the expanded beta is considered the second phase of testing and arrives alongside a new wave of movies and TV shows added to the service for all viewers. The company cautions that there may be bugs and that the Silverlight version's catalog isn't a one-for-one duplicate of what's available in the regular version of Watch Instantly, which normally requires Internet Explorer on a Windows PC or a set-top box with built-in Netflix support. The expansion is made possible by Silverlight's copy protection, which works regardless of operating system or browser and prevents casual rips of the video feed using third-party tools. Mac users have typically been excluded from such services in the past but have gained increasing access in recent months to commercial video services that once required Windows, such as Amazon's Video on Demand. Hulu and other free services have often escaped these limits because they rely on advertising, which lets Hulu, the Fox/NBC joint project, offer video for free. Reference: http://www.appleinsider.com/articles/08/11/02/netflix_opens_web_movie_streams_to_most_mac_users.html

During PDC ‘08, I was passed a note indicating that I should dig deeper into the bits to discover the snazzy new Taskbar. Upon cursory analysis, I found no evidence of such and dismissed the idea as completely bogus.

I got home and started doing some research on a potential new feature called Aero Shake when I stumbled upon an elaborate set of checks tied to various shell-related components, including the new Taskbar.

Update: Although a newer-looking Taskbar is present, it’s not exactly what you saw at PDC ‘08. For example, the Quick Launch toolbar still exists, Aero Peek doesn’t work properly, and Jump Lists are stale. This is likely why it wasn’t enabled out of the box, so set your expectations accordingly.

To use these features, which I call “protected features”, you must meet the following criteria:

- Must be a member of an allowed domain:
  - wingroup.windeploy.ntdev.microsoft.com
  - ntdev.corp.microsoft.com
  - redmond.corp.microsoft.com

- Must not be an employee with a disallowed username prefix:
  - a- (temporary employees)
  - v- (contractors/vendors)

Protected Feature Flowchart
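For readers who want the gating logic spelled out, here is a minimal Python sketch of the criteria listed above. It is not Explorer’s code. The function name, the case-insensitive comparison, and passing the domain and user name in as plain strings are assumptions for illustration; Explorer presumably obtains them from Win32 calls such as GetComputerNameExW and GetUserNameW. The sketch also doesn’t model how the real logic is split into separate checks.

```python
# Hypothetical sketch of the protected-feature gate; not Explorer's actual code.
# In Explorer the inputs would presumably come from GetComputerNameExW /
# GetUserNameW; here they are ordinary string arguments.

ALLOWED_DOMAINS = {
    "wingroup.windeploy.ntdev.microsoft.com",
    "ntdev.corp.microsoft.com",
    "redmond.corp.microsoft.com",
}

# a- = temporary employees, v- = contractors/vendors
DISALLOWED_PREFIXES = ("a-", "v-")


def passes_protected_feature_check(dns_domain: str, user_name: str) -> bool:
    """Return True if the domain is allowed and the user name has no
    disallowed prefix. Case-insensitive matching is an assumption."""
    domain_ok = dns_domain.lower() in ALLOWED_DOMAINS
    # str.startswith accepts a tuple and matches any of its prefixes.
    user_ok = not user_name.lower().startswith(DISALLOWED_PREFIXES)
    return domain_ok and user_ok
```

A full-time employee on ntdev.corp.microsoft.com passes; a v- account on the same domain, or anyone on an outside domain, does not.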

Because checking against these criteria is potentially expensive in terms of CPU cycles, the result of the check is cached for the duration of Explorer’s lifetime (per protected feature). The cached value is stored in a variable allocated in the image’s initialized data section (.data). Explorer does not initialize these variables at startup, and it checks for a cached result before performing any checks. I exploited this behavior by setting the initialized value in the image itself to 1 instead of 0, bypassing all twelve checks.

Why not use a hook to intercept GetComputerNameExW / GetUserNameW?

I thought about building a hook to inject into the Explorer process at startup, but I grew concerned that legitimate code in Explorer that uses those functions for other tasks would malfunction. And I was lazy.

Can I has too? Plz?

Simply download a copy of the tool I whipped up for either x86 or x64 (untested thus far), drop it into your Windows\ directory, and execute the following commands as an Administrator in a command prompt window:

- takeown /f %windir%\explorer.exe

- cacls %windir%\explorer.exe /E /G MyUserName:F (replacing MyUserName with your username)

- taskkill /im explorer.exe /f

- cd %windir%

- start unlockProtectedFeatures.exe

After changing the protected feature lock state, you can re-launch the shell by clicking the Launch button.
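The trick relies on the caching described earlier: a 0 in the cached variable means “no result yet”, so Explorer runs the checks, while a 1 is presumably taken as a final answer and the checks are skipped. The Python sketch below models that, along with the arithmetic needed to locate a .data variable in the file on disk: a section’s RVA is mapped to its raw file offset using the IMAGE_SECTION_HEADER fields VirtualAddress, PointerToRawData and SizeOfRawData. This is a hypothetical illustration, not how unlockProtectedFeatures.exe is implemented. The 0/1/2 encoding (the article only implies 0 = unchecked and 1 = unlocked), the 4-byte variable size, and every name here are assumptions.

```python
import struct
from typing import Callable, List, NamedTuple

# Assumed encoding of a cached per-feature result. The article implies only
# that 0 means "no cached result yet" and that 1 unlocks the feature.
NOT_CHECKED, ALLOWED, DENIED = 0, 1, 2


class CachedFeatureGate:
    """One protected feature whose check result is cached for the process lifetime."""

    def __init__(self, initial_value: int = NOT_CHECKED) -> None:
        # Stands in for the variable's initialized value in the image's .data section.
        self.cached = initial_value
        self.checks_run = 0

    def is_enabled(self, expensive_check: Callable[[], bool]) -> bool:
        # Run the expensive check only when nothing has been cached yet.
        if self.cached == NOT_CHECKED:
            self.checks_run += 1
            self.cached = ALLOWED if expensive_check() else DENIED
        return self.cached == ALLOWED


class Section(NamedTuple):
    # The IMAGE_SECTION_HEADER fields needed to map an RVA to a file offset.
    name: str
    virtual_address: int      # RVA at which the section is mapped
    pointer_to_raw_data: int  # file offset of the section's initialized data
    size_of_raw_data: int     # bytes of initialized data stored in the file


def rva_to_file_offset(sections: List[Section], rva: int) -> int:
    """Translate a relative virtual address into an offset within the image file."""
    for s in sections:
        if s.virtual_address <= rva < s.virtual_address + s.size_of_raw_data:
            return rva - s.virtual_address + s.pointer_to_raw_data
    raise ValueError(f"RVA {rva:#x} is not backed by file data in any section")


def patch_dword(image: bytearray, sections: List[Section], rva: int, value: int) -> None:
    """Overwrite an (assumed) 32-bit little-endian variable at `rva` in a file image."""
    struct.pack_into("<I", image, rva_to_file_offset(sections, rva), value)
```

An unpatched gate, `CachedFeatureGate()`, runs the check once and caches the result; a gate built with `initial_value=ALLOWED`, the equivalent of writing 1 into the image, returns True without ever calling the check. That is why patching the stored value sidesteps the domain and username tests without hooking any APIs.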

Screenshot of PDC ‘08 build with new Taskbar

Why did Microsoft do this?

I’m not sure why these features went into the main (winmain) builds wrapped with such protection. What are your thoughts?
